Integrity is hard

So what is a Culture of Integrity?

It may sound like a bunch of fluffy words you would hear from an organizational process improvement consultant. If that’s what you’re thinking, you are probably right, but that doesn’t mean it isn’t hugely valuable to an organization!

At the core of everything we do, there needs to be a belief that we are doing the “right thing”.

We need to know our colleagues are doing the “right thing”.

We need to know that management and executives are doing the “right thing”.

I’m “air-quoting” here because one of the most critical problems is this: what is the right thing to do in a world of grey-area scenarios?

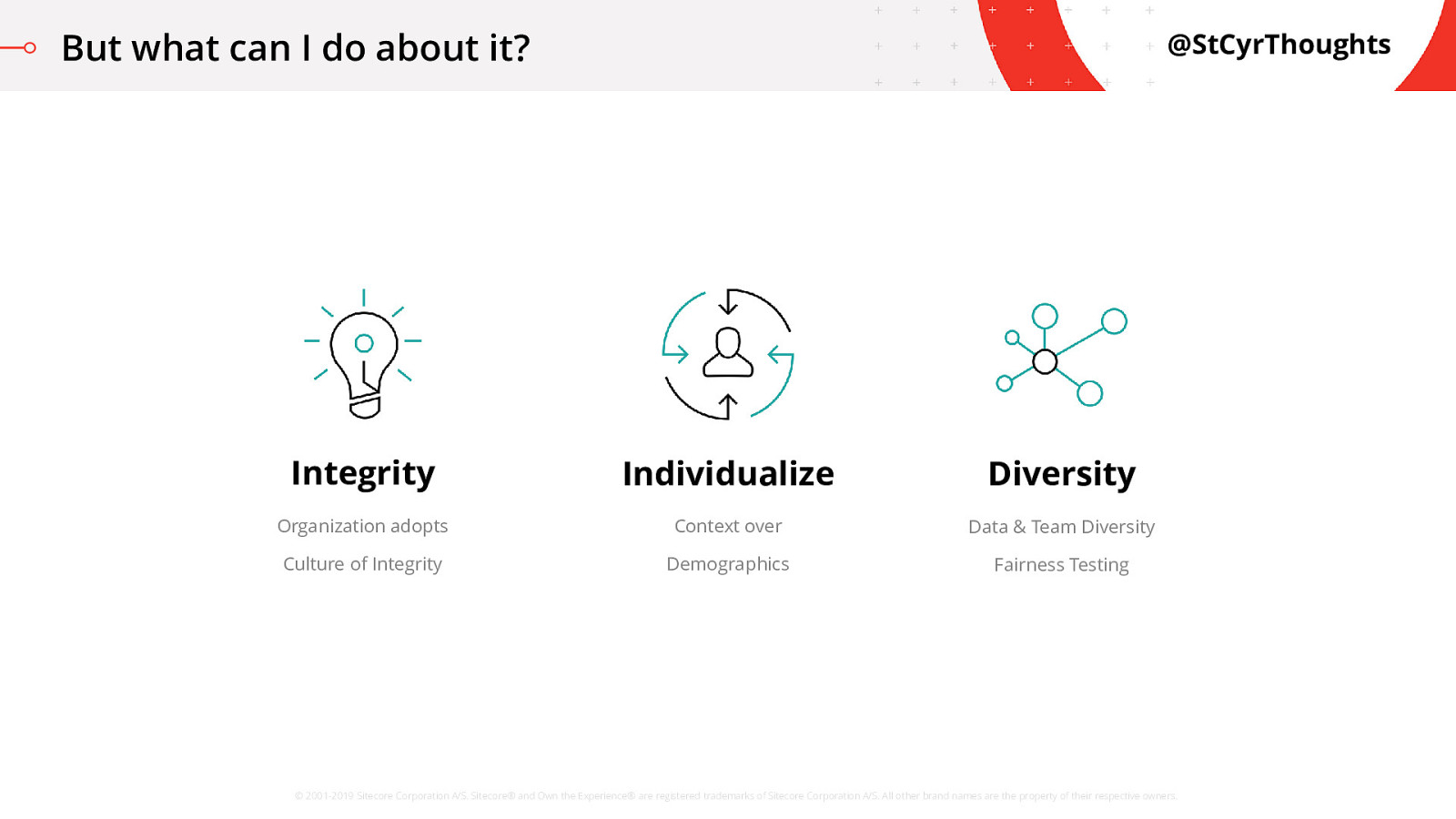

Start Learning

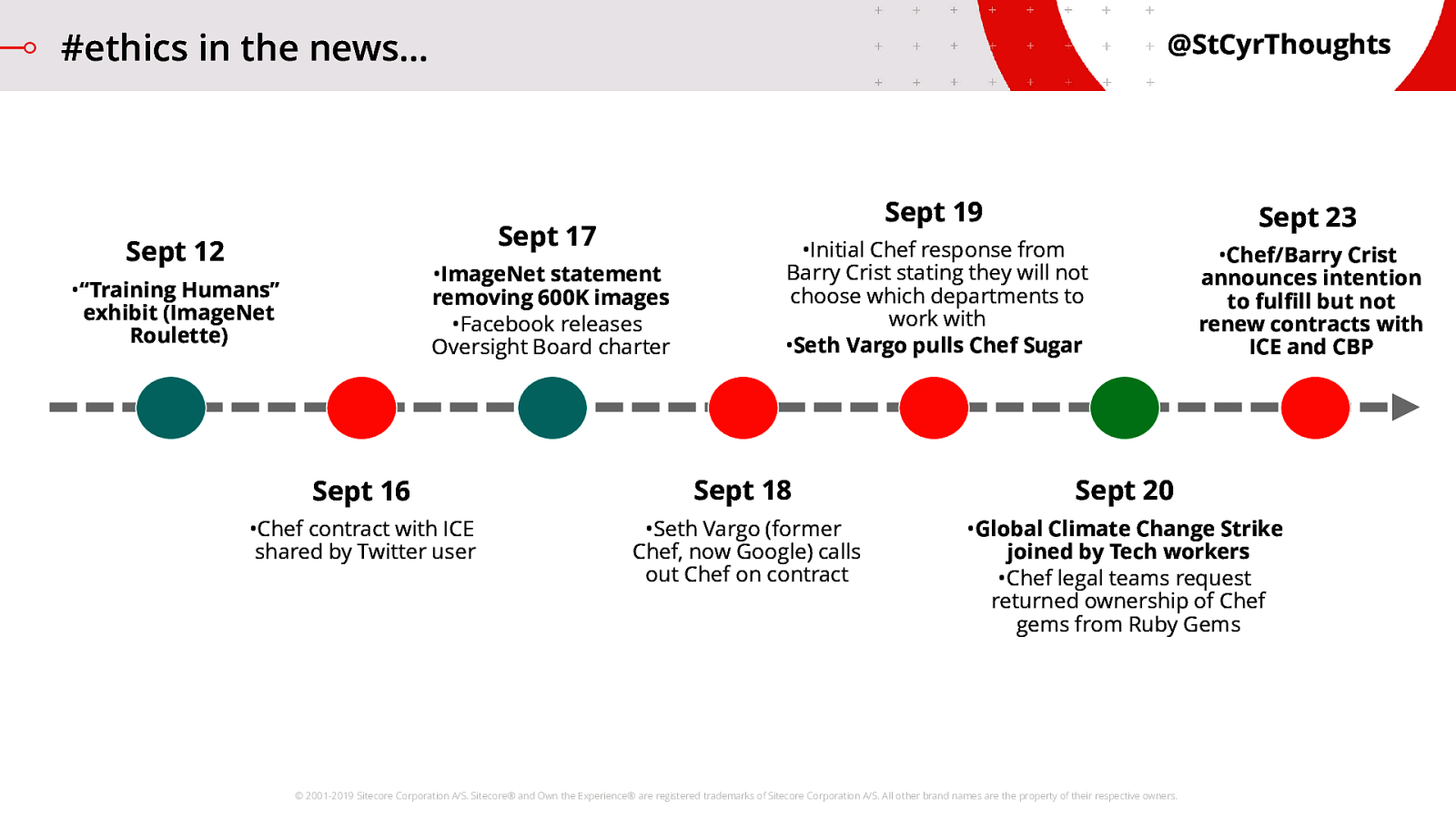

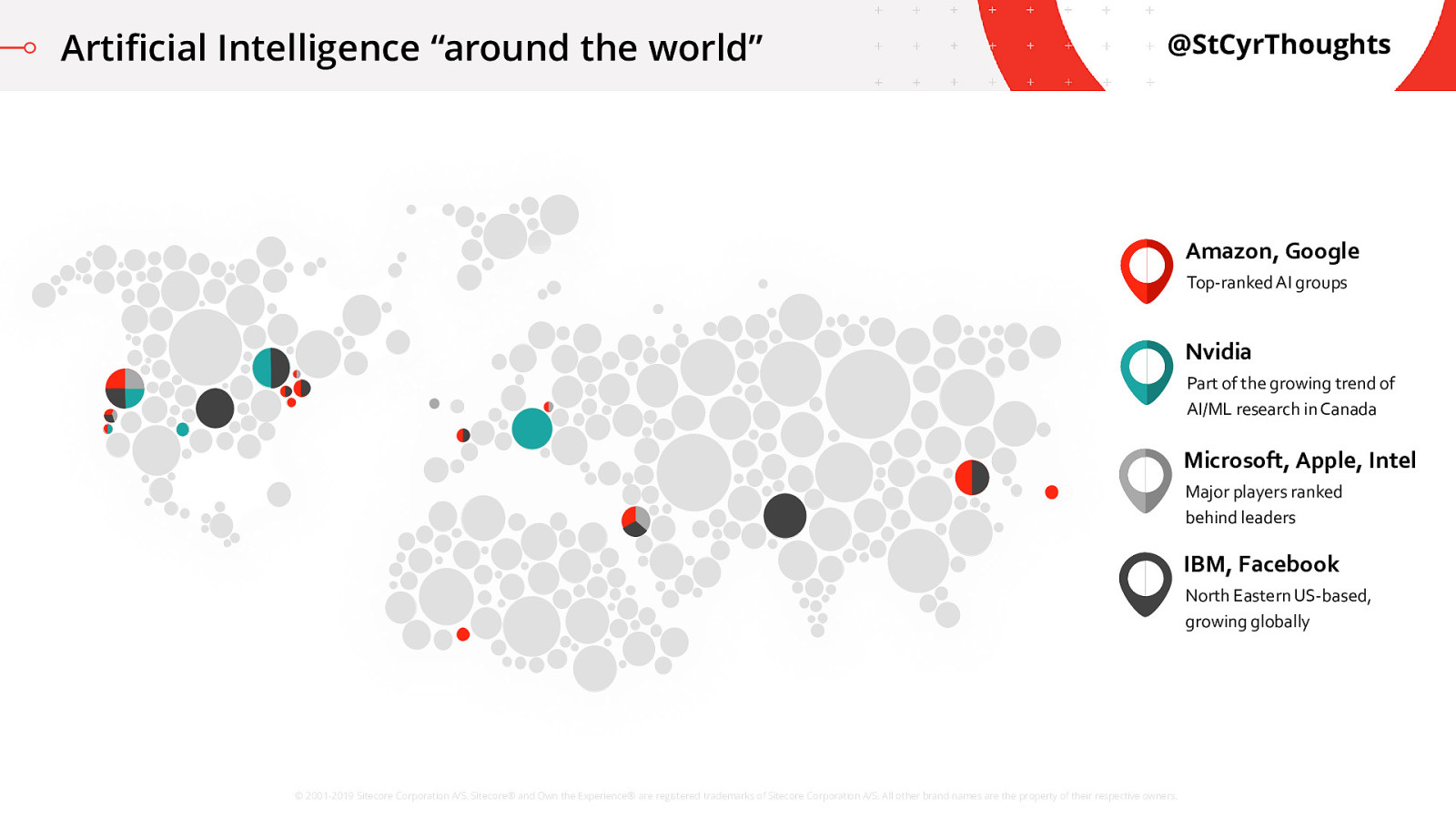

If you want to be more deliberate about ethics in your organization, the first thing you need to do is research. A lot of it. This is not a new field, and many organizations have already gone through this cultural transformation. You can learn a LOT from the successes and failures of others.

It takes a village

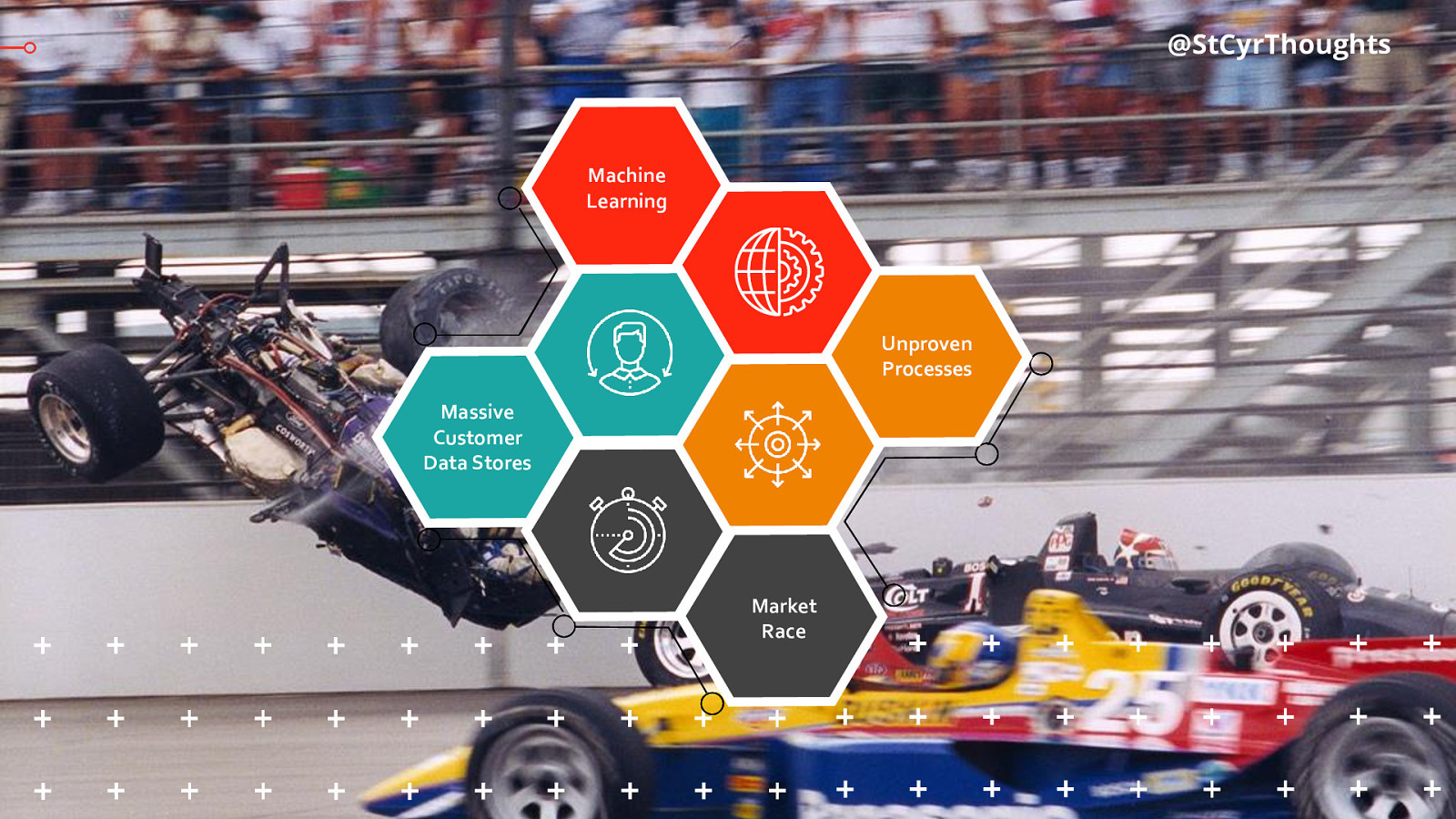

Adoption across the organization is a huge challenge, and it is not unique to ethics and integrity. I have seen the same challenge when adopting agile delivery frameworks, DevOps cultures, and Continuous Improvement practices. Take DevOps culture as an example: there is an inherent belief behind DevOps that we are all working together, as a single team, with a shared responsibility. This spans from idea to launch and continues through the entire life-cycle of the software. DevOps is a cultural shift that has to happen throughout an organization for it to succeed.

The same is true for handling ethics in machine learning. Everybody needs to be involved in building a deliberate, shared culture that supports thinking through problems and solutions, and asking whether something SHOULD be done.